The tragedy of corporate-owned proprietary AI

Written on 01 May 2025There’s a lot of skepticism if not downright hostility in the free and open source software community about emerging AI technologies, such as large language models and stable diffusion. Rightfully so, as these technologies have largely been a net negative for society, the software they infest, and many of the people who use them. But I don’t think it had to be that way.

Personally, I don’t think that much of the issues that AI has was inevitable. Sure, there still would have been some problems with AI that would have happened no matter what. The effect that AI has had on society, from people using it to generate wildly inaccurate foraging guides, to using it to write your essay in high school or college would have still happened no matter what. Still yet, AI could have been (and could still shape out to be in the future if we take the right steps now) much better and could have been a general net positive even if it did come with some downsides.

So what is the problem? Proprietary AI owned by for-profit corporations. That is why we are in the mess that we are in.

For-profit corporations and software in general don’t mix well together, as the corporation grows larger it’s focus becomes more laser-focused on making a profit on it’s products rather than delivering you a high quality software. This is how enshitifcation happens and make no mistake, AI is basically just being used by corporations as a marketing ploy for gullible investors and other people that don’t know any better.

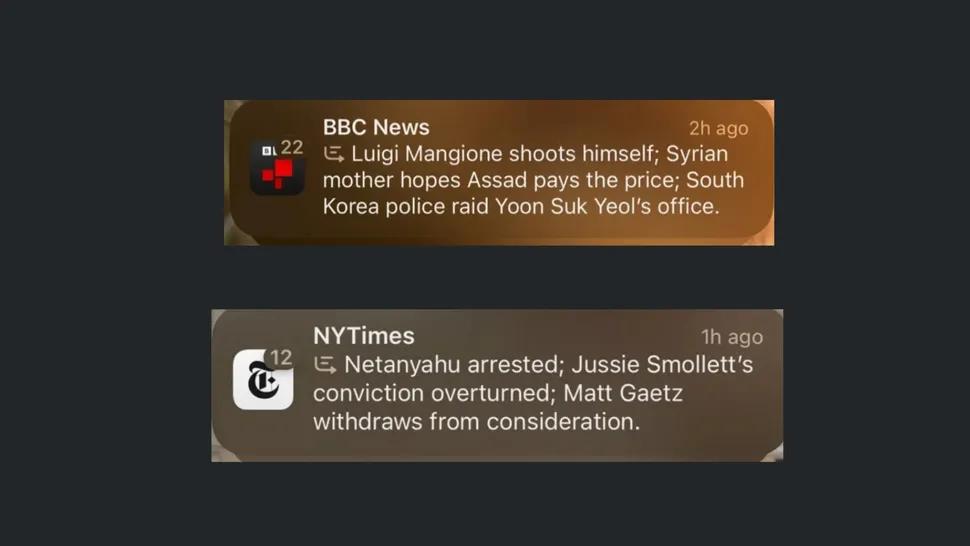

Do they have an incentive to make competent and responsible AI? Absolutely not. In the current state that LLMs are right now, they are not to be trusted any more than your conspiracy theorist uncle on Facebook. Yet, these large companies have pushed their LLMs in such a way that makes them seem more credible than they actually are. While it is impressive how far LLMs have come even just within the past 5 years, they are nowhere near good enough yet to be relying on what they say without fact checking every single thing they say. Do you also rememember how Apple Intelligence months back was giving people dangerously misleading news headlines? In case you need a refresher, here's a little screenshot:

[Image Credit: BBC]

Companies have been pushing AI as a miracle solution, and the average person will often come away with thinking that AI can do more things than it actually can be trusted to do. There is few warnings about the limitations of these technologies, such as how wildly inaccurate LLMs can often be and to fact check everything they say. Implementing a clearly visible warning like this would tarnish the perfect mircale drug reputation that companies are trying to cultivate for their AI chatbots.

There’s also the issue of data usage. Large companies have been known to scrape the internet for data to train their AI with, oftentimes training off material that the original authors have no idea and have given no consent for AI to use. It has also been observed that when AI is integrated into an operating system, that files stored on your device could be used to train AI.

Earlier, GitHub announced that they would be training Microsoft Copilot based on the git repos hosted there. Many of the projects on GitHub use the GPLv2 or GPLv3 licence. If someone was to ask Copilot for code, and the code Copilot generated was sampled from GPL code, and the person asking for such code is developing an application not using a licence compatible with the GPLv2 or GPLv3, than (don’t quote me on this, I’m not a legal expert) the author could have just committed a GPL violation without even knowing. There is often times little way to know where the data that AI is trained on comes from since the data sets are usually not open to the public, until you are able to prove with little doubt that whatever was being generated by AI infact was sampled by you.

However, many of these issues wouldn’t exist if most AI models and chatbots are FOSS and not owned by for-profit corporations. There would be an incentive to provide a high quality piece of software where it’s limitations are clearly outlined. It is always controversial when free and open source software grabs data when it’s not supposed to, so there would be more respect for what data gets to be used to train the AI. It would also be very easy to verify if FOSS AI trained on your data since by the definition of the OSI an open source AI must also have an open source model where you can view all the data that is being used to train it.

This is why properly funded FOSS projects will almost always give you higher quality software than their proprietary equivalents, and AI perhaps needs this treatment the most if AI ever wants to become a high quality product that people actually want to use. AI is perhaps a perfect example on why proprietary software always lends itself to enshitification, because for-profit companies have ruined what are genuinely interesting and quite innovative technologies by using them to chase profit.